vRealize Automation 8.2 - Terraform Configurations

vRealize Automation (vRA) 8.2 added support for running your Terraform (TF) configurations directly from the vRA interface. Simply register your code repository endpoint, either GitLab or GitHub, and select which Terraform configuration to run. vRA will even map the deployed resources to your vRA Cloud Accounts, Cloud Zones, and deployed resource objects. This is a great way to extend vRA beyond its natively supported resource types. You could use a TF configuration to deploy an F5 load balancer, an Azure VM and an AWS VM, create your Grafana dashboards, and register your Datadog agents, all from a single configuration.

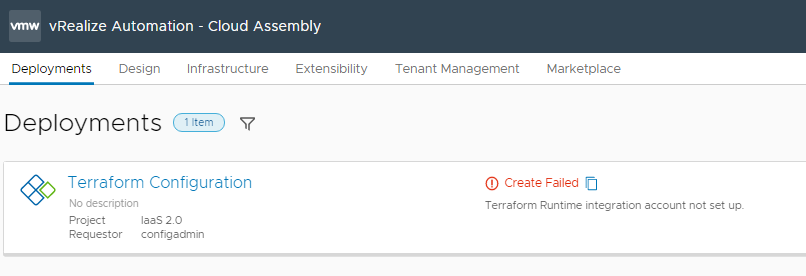

I was very excited to take a look at this, and an issue soon popped up. Instead of vRA running the Terraform code directly from the vRA appliance, you need a separate Kubernetes (k8s) cluster configured as a Terraform runtime environment. Without it, you will get the "Terraform Runtime integration account not set up." error message.

But isn't vRA 8 itself built on Kubernetes? Why can't we just run this locally?

Whilst this is 100% not supported by VMware GSS, let's take a look at how to configure an external Terraform runtime environment that is actually the vRA internal Kubernetes environment.

Log into your vRA appliance as "root" and run the command below.

    cat /etc/kubernetes/admin.conf

Copy the output to a text editor; we will use it shortly.

    apiVersion: v1
    clusters:
    - cluster:
        certificate-authority-data: <
        server: https://vra-k8s.local:6443
      name: kubernetes
    contexts:
    - context:
        cluster: kubernetes
        user: kubernetes-admin
      name: kubernetes-admin@kubernetes
    current-context: kubernetes-admin@kubernetes
    kind: Config
    preferences: {}
    users:
    - name: kubernetes-admin
      user:
        client-certificate-data: <
        client-key-data: <

Note that the server field currently references the internal k8s hostname, which is not accessible from outside the internal cluster. Update the server value with your vRA appliance's fully-qualified domain name, keeping the 6443 port, just like the example below.

    apiVersion: v1
    clusters:
    - cluster:
        certificate-authority-data: <
        server: https://vra8.homelab.local:6443
      name: kubernetes
    contexts:
    - context:
        cluster: kubernetes
        user: kubernetes-admin
      name: kubernetes-admin@kubernetes
    current-context: kubernetes-admin@kubernetes
    kind: Config
    preferences: {}
    users:
    - name: kubernetes-admin
      user:
        client-certificate-data: <
        client-key-data: <
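If you would rather not edit the file by hand, the hostname swap can be scripted. The snippet below is only a sketch: the function name `rewrite_kubeconfig_server` and the file paths in the usage note are my own placeholders, and it assumes the kubeconfig has a single `server:` line in the `https://host:port` form shown above. It prints the modified kubeconfig to standard output and leaves the source file untouched.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of vRA): print a kubeconfig with the API
# server hostname replaced, keeping the https:// scheme and the port as-is.
#   $1 = path to a copy of the kubeconfig
#   $2 = externally resolvable FQDN of the vRA appliance
rewrite_kubeconfig_server() {
  local src="$1" fqdn="$2"
  # Match "server: https://<host>:<port>" and swap only the <host> part.
  sed -E "s#^([[:space:]]*server:[[:space:]]*https://)[^:/]+(:[0-9]+)#\1${fqdn}\2#" "$src"
}
```

For example, after saving the `cat` output as `admin.conf` in your working directory, `rewrite_kubeconfig_server admin.conf vra8.homelab.local > vra-kubeconfig.yaml` would produce the file to paste into vRA. Check the `server:` line in the result before using it.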

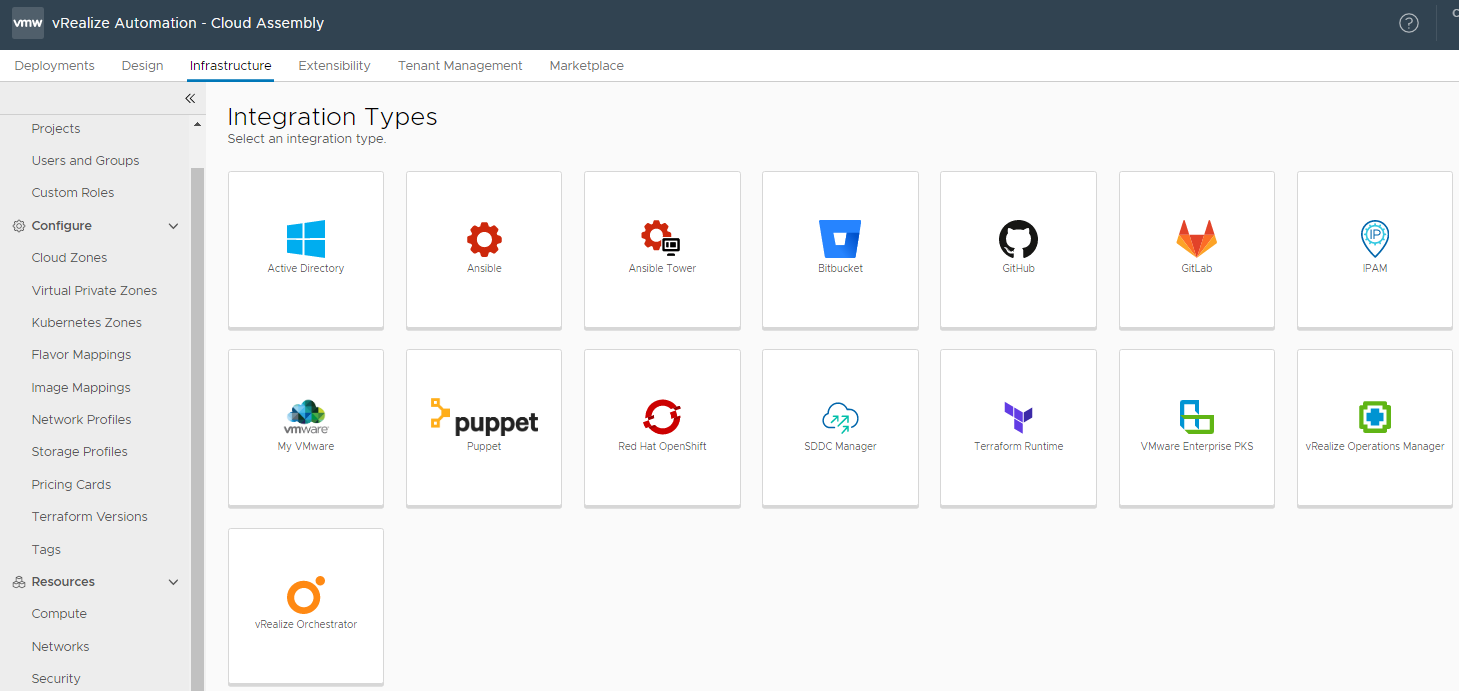

Log into your vRA environment and navigate to Cloud Assembly > Infrastructure > Connections > Integrations and select Add Integration > Terraform Runtime.

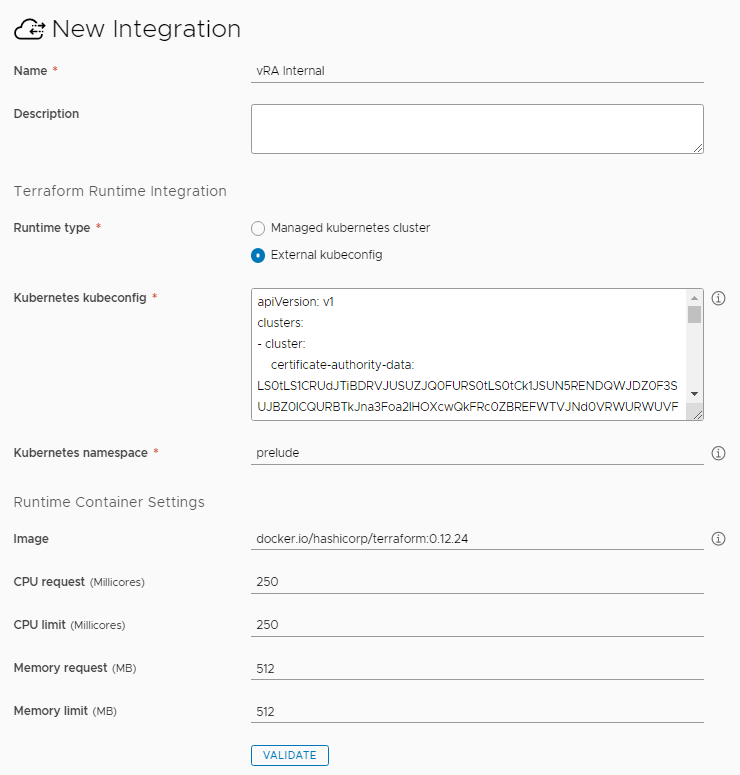

Provide a name, such as "vRA Internal". Select "External kubeconfig" as the Runtime type, and paste your updated configuration from above into the "Kubernetes kubeconfig" field. Make sure you haven't removed any values and that the server field contains the updated external hostname.

The vRA services run under the "prelude" namespace internally, so we will utilise the same namespace. Enter "prelude" as the "Kubernetes namespace".
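Before saving the integration, it can be worth confirming that the edited kubeconfig really reaches the cluster from outside the appliance and that the namespace exists. The following is a minimal sketch, assuming you have `kubectl` installed on a workstation and that the API server certificate is accepted for the external hostname (I have not verified the latter for every vRA build). The function name and the kubeconfig file name are placeholders.

```shell
#!/usr/bin/env bash
# Hypothetical helper: ask the cluster described by a kubeconfig file for a
# namespace. A non-zero exit status means the cluster was unreachable, the
# credentials were rejected, or the namespace does not exist.
#   $1 = path to the edited kubeconfig
#   $2 = namespace to look up (defaults to "prelude")
check_runtime_namespace() {
  local kubeconfig="$1" namespace="${2:-prelude}"
  kubectl --kubeconfig "$kubeconfig" get namespace "$namespace"
}

# Example (file name is a placeholder):
#   check_runtime_namespace ./vra-kubeconfig.yaml prelude
```

If the call fails with a connection or certificate error, the same kubeconfig is unlikely to validate in the integration form either.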

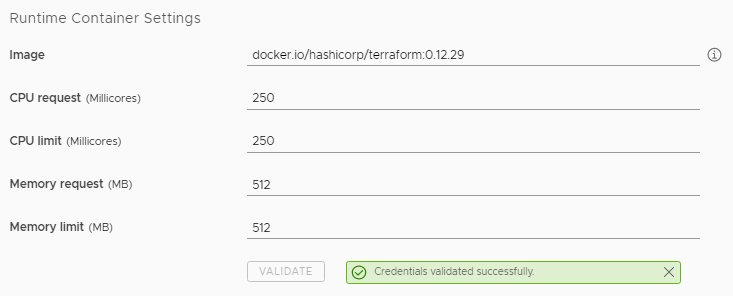

Here you can also update the Terraform image version you wish to use. For my environment, I will use the latest 0.12 build, which is 0.12.29. You can also alter the configuration to increase the amount of CPU and memory available to the Terraform pod. Validate your configuration and select Save.

Note that the Terraform image will be retrieved each time a Terraform configuration is run, as the pod is dynamically created at runtime and destroyed once the resources are provisioned. Your vRA appliance must have direct access to the internet to retrieve the Terraform Docker image. The implementation, unfortunately, doesn't respect the proxy configuration set in "vracli proxy", so if you require a proxy to reach the internet, this setup will not work. Time to build a dedicated Kubernetes cluster...
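A quick way to find out whether the appliance has the direct access described above is to probe the registry without a proxy. This is a rough sketch with some assumptions of mine: that the image is pulled from Docker Hub (`registry-1.docker.io`), that `curl` is available in the appliance's root shell, and that an unauthenticated request to the registry's `/v2/` endpoint answering with HTTP 401 (or 200) counts as "reachable". The function name is a placeholder.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report whether a container registry answers over HTTPS
# when any configured proxy is bypassed.
#   $1 = registry URL (defaults to the Docker Hub v2 endpoint, an assumption)
registry_reachable() {
  local url="${1:-https://registry-1.docker.io/v2/}" code
  # --noproxy '*' forces a direct connection; curl prints 000 if none is made.
  code=$(curl --noproxy '*' --silent --output /dev/null --max-time 10 \
              --write-out '%{http_code}' "$url") || true
  case "$code" in
    200|401) echo "reachable (HTTP $code)"; return 0 ;;
    *)       echo "not reachable (HTTP $code)"; return 1 ;;
  esac
}

# Example, run as root on the vRA appliance:
#   registry_reachable
```

A "not reachable" result suggests the Terraform pod will fail to pull its image, and a dedicated cluster is the way to go.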

Hopefully, a future release of vRealize Automation will let you run Terraform natively on the internal cluster, and will work through a proxy for access to the Docker repository. For now, the only supported way is to deploy a Kubernetes cluster...